Cloudy with a chance of data loss

Oracle has lost SSO credentials, hashed passwords, OAuth2 keys and customer data, but continues to deny it.

In March 2025, something quietly catastrophic happened inside Oracle.

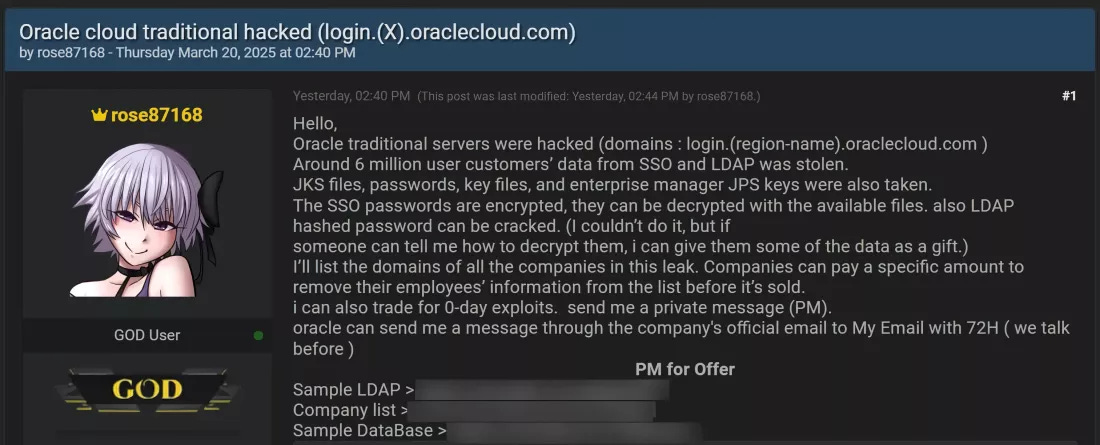

A hacker, using the alias Rose87168, broke into Oracle’s cloud identity platform, the very system that manages logins for some of the world’s largest enterprises, and stole a cache of data affecting over 144,000 businesses.

We’re talking hashed passwords, SSO credentials, OAuth keys, and internal customer identifiers. Everything you’d need to impersonate accounts or move laterally through a company’s cloud infrastructure.

And then, in a move ripped straight from a Netflix script, the hacker offered those companies a choice:

Pay up to have your data removed. Or watch it get sold to the highest bidder.

The cover-up that wasn't

When news of the breach started circulating, Oracle’s response wasn’t swift. It was strategically silent.

Publicly, the company denied anything had happened. They claimed their cloud systems were intact. No breach. No issue.

But behind closed doors, some customers were told a different story: that the breach hit Oracle Cloud Classic, a “legacy” platform separate from the company’s current offerings. This semantic dodge allowed Oracle to maintain plausible deniability in the media while trying to contain the fallout internally.

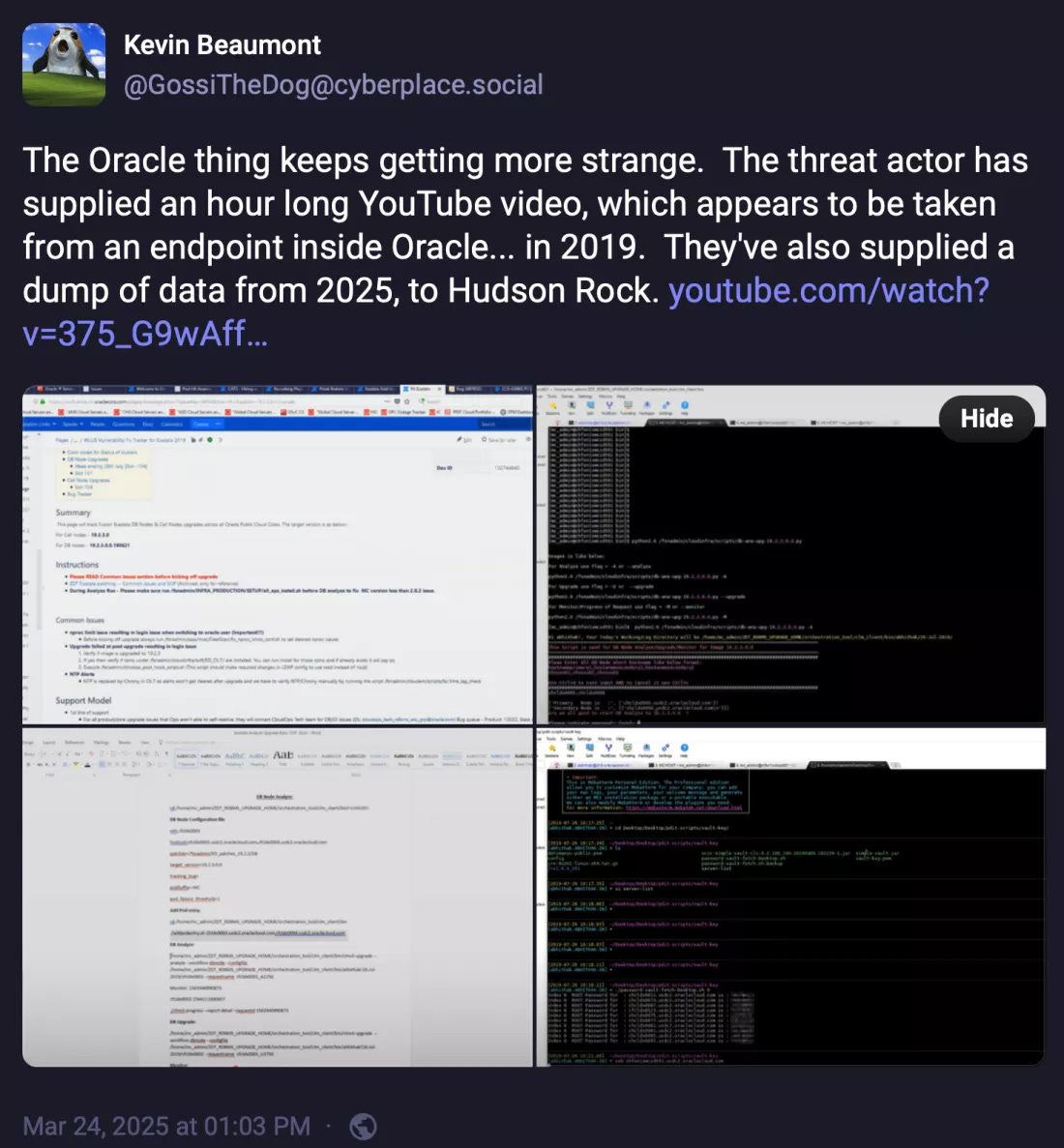

Unfortunately for Oracle, cybersecurity researchers weren’t buying it. Firms like Hudson Rock and CloudSEK validated the leaked data as authentic, confirming that credentials, tokens, and records were real and recent, with some entries dated as recently as 2024.

So, what we had was a top-tier cloud provider compromised… denying it… while third-party security analysts proved otherwise… as the hacker started leaking the stolen data via the dark web.

Why this matters to every business

If you’re reading this and thinking, “We don’t even use Oracle,” hit the brakes there, partner.

This incident has less to do with Oracle itself and more to do with the fragility of trust in your tech stack. What happened here could happen to any cloud provider, and when it does, the blowback isn’t isolated; it ripples out to customers, partners, and anyone downstream.

Here’s why this breach hits hard:

Cyber Attack & Credential Theft: This wasn’t just a hack. It was an infiltration of a cloud identity system, the backbone of authentication for thousands of businesses. If those credentials get cracked (and yes, the hacker is crowdsourcing help to do exactly that), this turns into a launchpad for a second wave of breaches.

Third-Party/Supplier Risk: Oracle wasn’t breached because of your vulnerabilities. But their breach just became your problem. This is classic supply chain disruption, where your risk exposure is dictated by someone else’s negligence.

Operational & Reputational Fallout: If a hacker gains access to your cloud environment through compromised SSO keys, it’s game over. Downtime, data loss, and reputational damage are all in play, not to mention legal exposure if customer data gets swept up in the chaos.

What can you do?

Here’s what resilience looks like in practice:

1. Identify Exposure

Check if your organisation appears in the leaked company list. Reach out to Oracle (yes, even if they’re cagey). Engage your threat intel providers. If you’re in the blast radius, assume compromise.
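The article doesn’t describe the format of the leaked company list, so the sketch below assumes a plain-text file with one company or tenant identifier per line. It simply normalises and intersects your own domains or tenant names with that list. `normalise`, `load_leaked_list` and `find_exposure` are hypothetical helpers, not an existing tool, and a miss is not proof you are safe: your tenant may be listed under a name you don’t expect.

```python
from pathlib import Path


def normalise(identifier: str) -> str:
    """Lowercase and trim an identifier so that 'Example.com.' and
    ' example.com' compare equal."""
    return identifier.strip().lower().rstrip(".")


def load_leaked_list(path: str) -> list[str]:
    # Assumption: a plain-text file with one identifier per line.
    return Path(path).read_text(encoding="utf-8").splitlines()


def find_exposure(our_identifiers, leaked_lines):
    """Return, sorted, the identifiers that appear in both lists."""
    leaked = {normalise(line) for line in leaked_lines if line.strip()}
    return sorted({normalise(i) for i in our_identifiers} & leaked)


if __name__ == "__main__":
    # Hypothetical sample data standing in for the real leaked list.
    leaked_list = ["acme-corp.example", "Globex.example", "  initech.example  "]
    ours = ["globex.example", "hooli.example"]
    print(find_exposure(ours, leaked_list))  # ['globex.example']
```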

2. Reset and Rotate

Change every credential tied to Oracle cloud logins or services. Rotate OAuth keys, service account credentials, and any shared secrets. Reset admin-level accounts first. And if you're still not using MFA across the board, now is the time to fix that.
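The actual rotation happens in each vendor’s console or API, which the article doesn’t go into, so this sketch only handles the ordering. Given a hypothetical inventory of credentials with issue dates, it queues everything issued on or before an assumed cutoff (say, the day you started remediation), admin-level accounts first and then oldest first. `Credential`, `rotation_queue` and `new_secret` are illustrative names; `new_secret` uses Python’s standard `secrets.token_urlsafe` to generate replacement shared secrets you control.

```python
import secrets
from dataclasses import dataclass
from datetime import date


@dataclass
class Credential:
    # Hypothetical inventory record; field names are illustrative only.
    name: str        # e.g. "oauth-client-billing"
    kind: str        # "oauth_key", "service_account", "shared_secret", ...
    is_admin: bool   # admin-level credentials get rotated first
    issued: date     # when the current secret was issued


def rotation_queue(inventory, cutoff):
    """Return credentials issued on or before `cutoff`, admin accounts
    first, then oldest first."""
    stale = [c for c in inventory if c.issued <= cutoff]
    # False sorts before True, so `not c.is_admin` puts admins at the front.
    return sorted(stale, key=lambda c: (not c.is_admin, c.issued))


def new_secret(nbytes: int = 32) -> str:
    """Generate a cryptographically strong, URL-safe replacement secret."""
    return secrets.token_urlsafe(nbytes)


if __name__ == "__main__":
    inventory = [
        Credential("svc-reporting", "service_account", False, date(2023, 6, 1)),
        Credential("sso-admin", "password", True, date(2024, 11, 20)),
        Credential("oauth-billing", "oauth_key", False, date(2025, 1, 15)),
        Credential("ci-token", "shared_secret", False, date(2025, 5, 2)),
    ]
    # Hypothetical cutoff: the date remediation started.
    for cred in rotation_queue(inventory, cutoff=date(2025, 4, 1)):
        print(cred.name)  # sso-admin, then svc-reporting, then oauth-billing
```

Sorting on a `(not is_admin, issued)` tuple keeps the “admins first” rule explicit, and anything issued after the cutoff is assumed to be a fresh, already-rotated secret.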

3. Monitor Like You're Under Attack

Because you might be. Set up alerts for unusual logins, geographic anomalies, or volume spikes in data access. Watch for signs of credential stuffing or brute-force attempts. And don’t just look at Oracle services; think about where those credentials might also be reused (your own bad hygiene is part of the equation).
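Here is a minimal sketch of two of those alerts, assuming you can export sign-in events into a simple record with time, user, source IP, country and outcome. `LoginEvent` is a hypothetical shape, not any vendor’s log schema. `failed_login_bursts` flags source IPs with many failures inside a sliding window, labelling them credential stuffing when several usernames were tried from that IP and brute force when only one was. `new_country_logins` flags a successful login from a country that user hasn’t signed in from before. The thresholds are placeholders to tune against your own baseline.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class LoginEvent:
    # Hypothetical normalised log record; map your IdP's fields onto this.
    time: datetime
    user: str
    source_ip: str
    country: str
    success: bool


def failed_login_bursts(events, window=timedelta(minutes=10), threshold=20):
    """Flag source IPs with at least `threshold` failed logins inside any
    sliding `window` of time."""
    recent = defaultdict(deque)  # ip -> timestamps of failures in the window
    users = defaultdict(set)     # ip -> every username that failed from it
    flagged = {}
    for e in sorted(events, key=lambda ev: ev.time):
        if e.success:
            continue
        q = recent[e.source_ip]
        q.append(e.time)
        users[e.source_ip].add(e.user)
        # Drop failures that have fallen out of the sliding window.
        while e.time - q[0] > window:
            q.popleft()
        if len(q) >= threshold:
            many_users = len(users[e.source_ip]) > 1
            flagged[e.source_ip] = "credential stuffing" if many_users else "brute force"
    return flagged


def new_country_logins(events):
    """Flag successful logins from a country not previously seen for that
    user. A user's first successful login only establishes a baseline."""
    seen = defaultdict(set)  # user -> countries with successful logins
    alerts = []
    for e in sorted(events, key=lambda ev: ev.time):
        if not e.success:
            continue
        if seen[e.user] and e.country not in seen[e.user]:
            alerts.append((e.time, e.user, e.country))
        seen[e.user].add(e.country)
    return alerts
```

In practice rules like these would live in your SIEM or identity provider’s alerting rather than a standalone script; the sketch is only meant to show the logic behind the alerts.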

4. Pressure Your Vendors

This is a transparency test. If your suppliers can’t provide clear, timely updates in a crisis, you need to reconsider who you’re partnering with. Review your contracts. Push for stronger SLAs around breach notifications and data handling.

5. Update Your Playbooks

Most incident response plans don’t account for vendor-originated breaches. That needs to change. Build scenarios for this kind of event: what would you do if Google Workspace, AWS IAM, or Microsoft Entra got hit next?

The bigger picture: Your resilience isn’t yours alone

We talk a lot about building “invisible” businesses. Ones that keep running no matter what hits them.

But if Oracle can be breached (and hide it), then the reality is that your resilience can be heavily affected by the vendors you trust. And sometimes those vendors treat transparency like a liability.

This breach is more than a cybersecurity headline. It’s a reminder that resilience isn’t just about backups and firewalls; it’s about governance, contracts, and visibility. The boring stuff no one wants to do… until it’s too late.

So don’t wait. Check your exposure. Rotate your keys. Tighten your posture.

And next time someone tells you, “Don’t worry, it’s just a legacy system,” maybe… worry a little, especially if their name is Oracle.
