How Millions of Abandoned AI Agents Are Operating in Enterprise Systems with Full Access to Your Data

Self-operating AI Agents are becoming the largest unmanaged risk in modern enterprises, and no one's talking about it.

Mark Zuckerberg confidently predicts that "we're going to live in a world where there are going to be hundreds of millions or billions of different AI agents eventually, probably more AI agents than there are people in the world."

Meanwhile, Microsoft CEO Satya Nadella acknowledges that "the unintended consequences of automation, displacement, are going to be real as well," while revealing that as much as 30% of Microsoft's code is already written by AI. Even Google's Sundar Pichai admits that more than 25% of new code at Google is AI-generated, while telling his workforce that "the stakes are high" for 2025.

You may have seen the ramblings and hype about AI Agents on your social and news feeds recently. The learning curve for technology and developer tools gets shallower every day, and as a result people can now create their own AI Agents with ease.

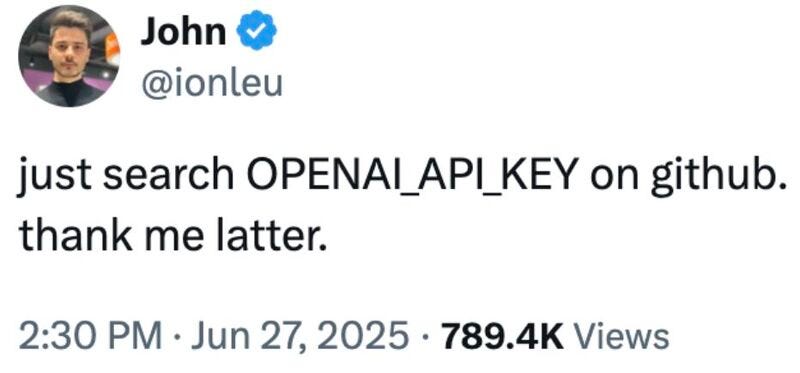

The image above helps paint a picture of why this can be bad for the rest of us.

Maybe don’t follow John’s advice here, but do consider what this means for cyber security risks. He is alluding to the fact that an API key (in this case, for OpenAI) authorises requests that are billed per token. Each token costs money, so if you can get hold of someone else’s API key, you can use as many tokens as you want on their bill.

99% of organisations experienced API-related security incidents in 2024, while 39 million exposed secrets (sensitive authentication credentials like API keys, passwords, access tokens, and other digital keys that grant access to business systems and data) are sitting in public code repositories right now.

Meanwhile, abandoned AI agents continue making autonomous decisions and accessing sensitive data long after teams have moved on to shinier projects. API data breaches jumped 80% year-over-year, and the average cost of a data breach hit $4.88 million in 2024.

Agents are only becoming more popular and easier to build. Managing the risks associated with them has never been more important.

APIs: The invisible backbone some forgot to secure

Think of APIs as digital translators between software systems. When you click "Sign in with Google," an API handles that conversation behind the scenes. Simple enough, right?

But I assure you it gets more interesting than that, particularly for risk managers.

Organisations now manage an average of 421 APIs, with enterprise sites processing 1.5 billion API calls monthly. 62% of companies report their APIs generate direct revenue, transforming them from simple connectors into profit centres.

But the problem is that API keys are like master passwords for machines: longer and more complex than human passwords, and often embedded directly in code. This makes them incredibly powerful and devastatingly dangerous when exposed.
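To make the alternative concrete, here is a minimal Python sketch that reads a key from an environment variable at runtime instead of pasting it into source code. The variable name `OPENAI_API_KEY` follows the convention OpenAI's SDKs use. `load_api_key` is a hypothetical helper. In production the variable would typically be populated from a secrets manager rather than typed into a shell.

```python
import os


def load_api_key(var_name="OPENAI_API_KEY"):
    """Read an API key from the environment instead of hard-coding it in source."""
    key = os.environ.get(var_name)
    if not key:
        # Fail loudly rather than silently falling back to a key pasted into the code.
        raise RuntimeError(f"{var_name} is not set; refusing to start without a key")
    return key
```

Calling `load_api_key()` at startup means the key never lands in the repository. That leaves nothing for a GitHub search to find.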

GitHub has become an unintentional showcase of this vulnerability. Developers routinely embed API keys in source code, configuration files, and documentation, then commit those files to public repositories on the site. Even when they later delete an exposed key, Git's revision history keeps it unless the history is rewritten. Anyone who has already cloned or forked the repository still has a copy. I’m sure you can imagine the issues here.

Security researchers (and attackers) find these keys with predictable search patterns, like these:

"sk-" AND (openai OR gpt)

"OPENAI_API_KEY" AND github

The scale is staggering: GitGuardian detected 13 million secrets leaked via public GitHub repositories in a single year. One exposed Stripe API token was worth $17 million in potential fraud exposure. And vibe coding (creating applications with AI, without any developer skills) is on the rise. That puts API keys in the hands of people who may never have learned to keep them out of their code.
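Here is a rough idea of how such searches become an automated check. The Python sketch below applies two regular expressions to text and to the files in a directory. The patterns are illustrative assumptions: OpenAI-style keys beginning `sk-`, and AWS access key IDs beginning `AKIA`. Real key formats change over time. The sketch also only scans the current working tree. Git stores past commits compressed, so scanning history needs a dedicated tool such as gitleaks or TruffleHog.

```python
import re
from pathlib import Path

# Illustrative patterns only; maintained scanners ship far larger, updated rule sets.
PATTERNS = {
    "openai_style_key": re.compile(r"\bsk-[A-Za-z0-9_-]{20,}"),
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


def scan_text(text):
    """Return (pattern_name, line_number, redacted_match) for each suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                # Report only a short prefix so the scan output doesn't leak the key.
                findings.append((name, lineno, match.group(0)[:6] + "..."))
    return findings


def scan_tree(root):
    """Scan every readable text file under root and report findings per file."""
    results = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binaries and unreadable files
        for name, lineno, redacted in scan_text(text):
            results.append((str(path), name, lineno, redacted))
    return results
```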

AI never clocks out

Traditional software waits for human input, executes a task, and stops once it is done. The most valuable and attractive aspect of AI Agents is that they maintain persistent connections, store conversation context, and make autonomous decisions without human oversight. It’s this opportunity that is changing the face of business as we know it. Where we once outsourced work to teams in the opposite time zone to keep the business running 24/7, we can now do it more cheaply, and indefinitely.

But there is a nightmare scenario at play. An AI Agent deployed for customer service remains active after the team pivots to other projects. The Agent keeps API connections to customer databases, payment systems, and communication platforms. It continues processing requests, making decisions, and accessing sensitive data, operating in what Microsoft's AI Red Team calls a "forgotten deployment".

Unlike static applications, AI agents can process new inputs and modify behaviour autonomously, maintain active API keys across multiple enterprise systems, store persistent memories that influence future decisions, and execute commands across interconnected business systems.
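One way to look for forgotten deployments is to check an inventory of agents against a few simple rules. The sketch below is hypothetical throughout: `AgentRecord`, its fields, and the 90-day review window are assumptions, not a standard. It flags any agent that still holds credentials but has no named owner or no recent human review. Externally reachable agents are listed first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AgentRecord:
    # Hypothetical inventory fields; adapt to whatever your asset register holds.
    name: str
    owner: Optional[str]
    last_human_review: datetime
    active_credentials: int
    externally_reachable: bool


def find_forgotten_agents(agents, now, max_age_days=90):
    """Flag agents that still hold credentials but have no owner or no recent review."""
    cutoff = now - timedelta(days=max_age_days)
    flagged = []
    for agent in agents:
        if agent.active_credentials == 0:
            continue  # nothing left to revoke
        stale = agent.last_human_review < cutoff
        orphaned = agent.owner is None
        if stale or orphaned:
            flagged.append(agent)
    # Externally reachable agents sort first: the riskiest combination.
    flagged.sort(key=lambda a: not a.externally_reachable)
    return flagged
```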

The most dangerous scenario involves agents that are simultaneously externally accessible and internally connected. Imagine an AI agent exposed through your company website while maintaining back-end access to databases and cloud infrastructure. (A common example is a simple AI chatbot on your website that connects through to your helpdesk and CRM.)

Real incidents prove this isn't theoretical. Samsung engineers used ChatGPT to debug proprietary source code, inadvertently submitting intellectual property to external systems. The company immediately banned generative AI tools company-wide (for now).

The AgentSmith vulnerability on LangSmith's platform was even worse. Malicious AI agents pre-configured with proxy servers intercepted user data, capturing OpenAI API keys, prompts, and uploaded documents from multiple organisations. The vulnerability scored 8.8 on the CVSS scale. For context, the ConnectWise ScreenConnect vulnerability that hit organisations worldwide in early 2024 scored a perfect 10.

An organisational train wreck

Ultimately, all cyber issues trace back to human error. The root cause isn't technical; it's organisational. It’s the fundamental internal policies and culture that set digitally resilient companies apart.

96% of employees now use generative AI applications in some form (up from 74% in 2023), while 38% admit to sharing sensitive work information with AI tools without employer permission. Meanwhile, 63% of enterprises fail to assess AI tool security before deployment.

This creates "shadow AI", unauthorised implementations that bypass IT security controls. Marketing teams deploy AI customer segmentation tools processing sensitive data, HR uses AI resume screening without validation, and engineering integrates code generation assistants exposing proprietary algorithms.

The 2023 Microsoft AI research team incident exemplifies these failures. While publishing open-source AI training data on GitHub, the team shared an overly permissive Azure storage access token (a shared access signature, or SAS). The token exposed 38 terabytes of private data, including passwords and secret keys. Not malicious intent, just absent security protocols.
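Misconfigured SAS tokens are easy to check for mechanically. Azure SAS URLs carry their permissions in the `sp` query parameter and their expiry in `se`. The Python sketch below parses a URL and flags write-type permissions, or an expiry further out than a chosen limit. The seven-day limit and the set of "risky" permission letters are assumptions to adjust to your own policy.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse

# Permission letters that let a token holder change or remove data
# (add, create, write, delete). Treat this set as a policy assumption.
RISKY_PERMISSIONS = set("acwd")


def audit_sas_url(url, now, max_lifetime=timedelta(days=7)):
    """Return a list of human-readable problems found in a SAS URL."""
    params = parse_qs(urlparse(url).query)
    problems = []

    permissions = params.get("sp", [""])[0]
    risky = sorted(set(permissions) & RISKY_PERMISSIONS)
    if risky:
        problems.append("grants write-type permissions: " + "".join(risky))

    expiry_raw = params.get("se", [None])[0]
    if expiry_raw is None:
        problems.append("no expiry (se) parameter found")
    else:
        # SAS expiries are ISO 8601; assume UTC when no offset is given.
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        if expiry - now > max_lifetime:
            problems.append(f"expires {expiry.date()}, beyond the allowed lifetime")
    return problems
```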

Similarly, Lowe's Market exposed AWS tokens through publicly accessible environment files, allowing access to credit card information and customer addresses. 3Commas suffered a $22 million theft when 10,000 API keys were dumped on Twitter.
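Publicly accessible environment files usually get there because a secrets file sits inside a directory the web server serves. A minimal pre-deployment check could walk the web root and report anything that should never be public. It assumes a hypothetical deny-list of file names and extensions:

```python
import os

# Hypothetical deny-list of files that should never be served publicly.
SENSITIVE_NAMES = {".env", ".env.local", ".env.production", "credentials", "id_rsa"}
SENSITIVE_EXTENSIONS = {".pem", ".key"}


def find_exposed_files(web_root):
    """Return sorted relative paths (with '/' separators) of sensitive files under web_root."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(web_root):
        for filename in filenames:
            _, ext = os.path.splitext(filename)  # ".env" has no extension here
            if filename in SENSITIVE_NAMES or ext in SENSITIVE_EXTENSIONS:
                rel = os.path.relpath(os.path.join(dirpath, filename), web_root)
                hits.append(rel.replace(os.sep, "/"))
    return sorted(hits)
```

Running a check like this in the deployment pipeline, and failing the build on any hit, catches the mistake before the files are live.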

McKinsey research shows CEO oversight of AI governance correlates most strongly with bottom-line impact, yet almost 50% of board directors say AI isn't on their agenda. Well, it should be. And this is the problem. When leadership aren’t taking this seriously, employees who use AI to help in their work are less likely to take security seriously either.

What to do: Building real defences (not security theatre)

All this can seem overwhelming. Where does one start, and what if IT security isn’t your area or responsibility? I find the most useful question to ask is: what external systems are we connecting to, and how?

An IT professional would start with the immediate technical actions. That means running automated API discovery to identify every API in use, including forgotten "zombie" APIs. Research suggests approximately 350 secrets are exposed per 100 employees annually, which makes comprehensive scanning essential.
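API discovery often starts by comparing the traffic an API gateway actually sees with the endpoints that are documented. The sketch below assumes a hypothetical log format of one `METHOD PATH STATUS` entry per line, plus a hand-maintained set of documented endpoints. Anything seen in traffic but missing from the inventory is a candidate shadow or zombie API.

```python
import re
from collections import Counter

# Hypothetical access-log format: "<METHOD> <PATH> <STATUS>" per line.
LOG_LINE = re.compile(r"^(GET|POST|PUT|PATCH|DELETE) (\S+) (\d{3})$")


def normalise(path):
    """Drop the query string and collapse numeric IDs so /users/42 and /users/7 match."""
    path = path.split("?", 1)[0]
    return re.sub(r"/\d+(?=/|$)", "/{id}", path)


def discover_undocumented(log_lines, documented):
    """Return {(method, endpoint): hit_count} for endpoints absent from `documented`."""
    seen = Counter()
    for line in log_lines:
        m = LOG_LINE.match(line.strip())
        if m:
            method, path, _status = m.groups()
            seen[(method, normalise(path))] += 1
    return {ep: n for ep, n in seen.items() if ep not in documented}
```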

Developers should scan source code repositories for exposed API keys, audit key storage locations, and immediately revoke any exposed credentials. They should also implement emergency rate limiting on high-risk endpoints.
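Emergency rate limiting is commonly implemented as a token bucket. Each API key gets a bucket that refills at a steady rate and allows short bursts up to a fixed capacity. This is a minimal in-memory sketch. The capacity and refill rate are placeholder values. The `clock` parameter exists only so the behaviour can be tested without waiting. A multi-server deployment would need shared state (for example, in a cache) rather than a Python dictionary.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self, cost=1.0):
        now = self.clock()
        # Refill for the time elapsed since the last call, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


_buckets = {}


def allow_request(api_key_id, capacity=10, rate=1.0):
    """One bucket per API key, created on first use."""
    if api_key_id not in _buckets:
        _buckets[api_key_id] = TokenBucket(capacity, rate)
    return _buckets[api_key_id].allow()
```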

API key management requires actual security controls. Generate keys using cryptographically secure methods and store them in dedicated services like AWS Secrets Manager or HashiCorp Vault, which encrypt them at rest with AES-256. Apply least privilege principles: each key should access only what it absolutely needs.
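For keys your own service issues to others, a common pattern is to generate them from a cryptographically secure random source, store only a hash, and attach explicit scopes. The sketch below uses Python's standard `secrets`, `hashlib`, and `hmac` modules. The in-memory store, the `key_id.secret` format, and the scope names are hypothetical. Keys your code consumes from third parties belong in a secrets manager instead, not in a table like this.

```python
import hashlib
import hmac
import secrets

# Hypothetical in-memory store: key_id -> (sha256 hex digest, frozenset of scopes).
_KEY_STORE = {}


def issue_key(scopes):
    """Create a new key; the full value is returned once and only its hash is kept."""
    key_id = secrets.token_hex(8)
    secret = secrets.token_urlsafe(32)  # 32 random bytes from the OS CSPRNG
    digest = hashlib.sha256(secret.encode()).hexdigest()
    _KEY_STORE[key_id] = (digest, frozenset(scopes))
    return f"{key_id}.{secret}"


def authorise(presented_key, required_scope):
    """Check the key is genuine and explicitly holds the scope being used."""
    try:
        key_id, secret = presented_key.split(".", 1)
    except ValueError:
        return False  # not in key_id.secret form
    record = _KEY_STORE.get(key_id)
    if record is None:
        return False
    digest, scopes = record
    candidate = hashlib.sha256(secret.encode()).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(candidate, digest):
        return False
    # Least privilege: no scope, no access, even with a valid key.
    return required_scope in scopes
```

A plain SHA-256 hash is reasonable here because the key is long and random. Slow password hashes such as bcrypt matter for low-entropy, human-chosen passwords.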

Another common technique is to rotate keys automatically every 30-90 days based on risk level. Maintain comprehensive audit logs tracking all key activities.
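A rotation policy tied to risk level can be expressed as a small check that runs on a schedule. The tier names and ages below (30, 60, and 90 days) are assumptions chosen to sit inside the range mentioned above. Each rotation is recorded as a JSON audit line that names the key but never contains it.

```python
import json
from datetime import datetime, timedelta

# Hypothetical risk tiers mapped to a maximum key age (30-90 days).
MAX_AGE = {
    "high": timedelta(days=30),
    "medium": timedelta(days=60),
    "low": timedelta(days=90),
}


def keys_due_for_rotation(keys, now):
    """keys: iterable of (key_id, created_at, risk_tier). Returns IDs past their limit."""
    due = []
    for key_id, created_at, risk in keys:
        # Unknown tiers get the strictest (high-risk) limit.
        limit = MAX_AGE.get(risk, MAX_AGE["high"])
        if now - created_at >= limit:
            due.append(key_id)
    return due


def audit_event(event, key_id, now):
    """Return one JSON audit-log line; never include the key material itself."""
    return json.dumps({"ts": now.isoformat(), "event": event, "key_id": key_id})
```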

AI agent security demands specialised controls. Your organisation should deploy agents in sandboxed environments with network restrictions and granular permissions. Implement circuit breakers that halt an agent's activity when it exceeds set thresholds, and maintain human oversight for high-risk operations.
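A circuit breaker for an agent can be as simple as a guard object that every tool call passes through. In this hypothetical sketch, `AgentGuard` trips after a fixed number of actions and stays open until a human resets it. Actions on a high-risk list are held unless a human has approved them. The threshold and action names are placeholders.

```python
from dataclasses import dataclass


class CircuitOpen(Exception):
    """Raised when the breaker has tripped and the agent must stop acting."""


@dataclass
class AgentGuard:
    max_actions: int = 50  # hypothetical per-session threshold
    high_risk_actions: frozenset = frozenset({"refund", "delete_record", "send_bulk_email"})
    count: int = 0
    tripped: bool = False

    def check(self, action, approved_by_human=False):
        """Return True to execute, False to hold for review; raise if the breaker is open."""
        if self.tripped:
            raise CircuitOpen("breaker open: a human must reset it")
        self.count += 1
        if self.count > self.max_actions:
            self.tripped = True
            raise CircuitOpen(f"exceeded {self.max_actions} actions")
        if action in self.high_risk_actions and not approved_by_human:
            return False  # hold for human review instead of executing
        return True

    def reset(self):
        """Called by a human operator after investigating why the breaker tripped."""
        self.count = 0
        self.tripped = False
```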

Use real-time behavioural monitoring and anomaly detection. Content filtering can block many prompt injection attempts, while comprehensive input validation protects against other malicious inputs.
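As a minimal illustration of anomaly detection, the sketch below compares each minute's request count from an agent against a rolling baseline. It flags values more than three standard deviations from the mean (a z-score test). The window size, the threshold, and the floor of 1.0 on the standard deviation are assumptions. Real monitoring would track more signals than raw volume.

```python
from collections import deque
from statistics import mean, pstdev


class RateAnomalyDetector:
    """Flag a per-minute request count that sits far outside the recent baseline."""

    def __init__(self, window=60, threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, count):
        anomalous = False
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            # Floor the deviation so a perfectly flat baseline doesn't flag tiny changes.
            sigma = max(pstdev(self.history), 1.0)
            anomalous = abs(count - mu) / sigma > self.threshold
        self.history.append(count)
        return anomalous
```

Because every observation joins the baseline, a sustained change in behaviour will gradually stop being flagged. That is a deliberate simplification here.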

And finally, policy frameworks must address governance gaps. Adopt established frameworks like NIST's AI Risk Management Framework. Create cross-functional AI governance committees with clear authority and accountability.

The bottom line: AI Agents are a bad actor’s best friend

This convergence of AI adoption and API proliferation has created unprecedented security challenges. With 99% of organisations experiencing API security incidents and 39 million exposed secrets in public repositories, the crisis is already here.

Abandoned AI agents in forgotten deployments represent ticking time bombs, combining persistent access with autonomous decision-making in ways traditional security models never anticipated.

Organisations that recognise this as a strategic business issue requiring executive leadership—not just an IT problem—will leverage AI's benefits safely. Those waiting for their first major incident will find themselves explaining to stakeholders why they didn't act when the warning signs were flashing neon bright.

The choice is simple: Invest in comprehensive API and AI security governance now, or prepare for serious crisis management later. Your call.